From Prototype to Product: Software Engineering in Tableau’s Early Days

At the premier visualization research conference this year, two of Tableau’s founders received a special recognition of their foundational research that “has stood the test of time” and was also the basis for Tableau. This is the story of how we turned the original research into a product and a successful company.

The IEEE VIS conference is the largest forum for academic and applied research in visualization. It grants Test of Time Awards each year that honor work that has endured and is still relevant 20 years after it was published. This year, Tableau co-founders Chris Stolte and Pat Hanrahan, with their former colleague Diane Tang, received one of them for the research underlying Tableau — a paper titled Polaris: a system for query, analysis and visualization of multi-dimensional relational databases.

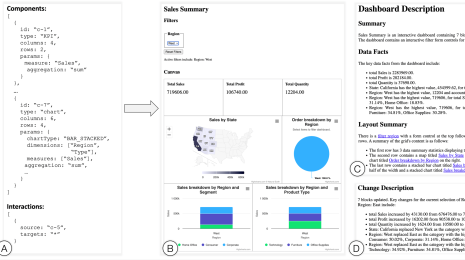

The paper laid out several key ideas: interactive specification of the visualization using a drag-and-drop user interface, the VizQL query language that described both the visualization and the data query, and live retrieval of the relevant data directly from a database (rather than the common approach of loading data files into memory).

In 2003, Chris Stolte, Christian Chabot, and Pat Hanrahan founded Tableau on this work, to develop Polaris from an academic prototype into the company’s first product — Tableau Desktop. Academic prototypes are mostly meant to demonstrate an idea, not to withstand daily use by many different users with different needs, data, and environments. Turning the prototype into a product that could be shipped was not a trivial undertaking — technical and product challenges stood between these inventors and building a successful company.

I was Tableau’s seventh employee, jumping back into a developer role after leading engineering teams at another California-based startup (which is a story for another time). Today, I’m Tableau’s CTO, focused on looking at future technologies and product ideas that we could leverage to push our customer’s ability to work with their data to new heights.

Product #1

In the early days, Tableau was a total unknown — nobody had heard of us, so why would they buy our product? One challenge from those early days was to remove barriers to people trying out what we thought was an amazing product from a new company. We had to meet our customers where they were, from a technology and deployment perspective, to make it easy for a data worker to adopt a new product. It was 2004 — the cloud was non-existent, data lived inside corporate data centers, and Apple had no presence in the enterprise. The hot technology was .NET, but even that required a bunch of upgrades to Windows and installed frameworks, and most businesses at the time just weren’t there. We chose to build the first version of Tableau Desktop using MFC — Microsoft Foundation Classes, a staid framework that could easily be deployed on any Windows platform running at the time, and for which developers were relatively plentiful.

We also wanted to use the data technology that our customers used. Yes, this meant supporting the popular relational databases of the time, like Microsoft SQL Server, Oracle, Postgres, MySQL. But it also meant adapting the ideas in the original paper to support hierarchical databases (cubes), which spoke an entirely different query language called MDX. The relational and hierarchical worlds shared some ideas, but resulted in database platforms with very different sets of capabilities. That meant leveraging VizQL to abstract away as many of the details of the underlying data platform as we could (building the beginnings of a great data layer in the process), while letting some of the important differences shine through. But this abstraction wasn’t enough — when working with Oracle to build a connector to their database, they warned us about generating “SQL by the yard” and the performance problems it could cause. So we had to go from an abstract usage of a variety of different data platforms to specific, tuned SQL or MDX that would run well against the target platform. That meant learning those systems well, and encoding that knowledge into query generation systems. We had to know the best ways of getting metadata from the database that would help us both build a great experience and generate the best queries. Understanding the collation of the data allowed us to do important optimizations like translating enumerated filters to ranges, which streamlined our queries and made them more efficient for the database to execute.

Formalisms and Visualization

The formalism of VizQL and the data abstractions that we built did give us a nice way of ensuring that our rapidly evolving system kept running well — they provided an ideal interface around which we could build automated tests for anything that the entire system could produce, from visuals to abstract usage of the data platform to concrete queries that we would run. Because our VizQL pipeline not only built visualizations but the queries to go with them, we could automatically generate tests for any new database platform that we added. Of course, it turns out that not all databases will compute things exactly the same way on floating-point columns, so we had to accept that each database platform might have its own “right” answer. Testing the visualizations was a bit more challenging, as many of the graphics systems of the time weren’t guaranteed to generate the same output on every machine, which later led to lots of approximate comparisons and readable text-based representations of the visualization in the test pipeline. It wasn’t a complete solution to testing what would become a complex system, but it gave us confidence in the evolution of the system in those early days.

Tableau would become known for its visualizations — they were dynamic, interactive, and beautiful. And we wanted them to be rich — it wasn’t good enough to show just a few bars or a dozen lines, or even a thousand points in a scatterplot. We wanted our system to let people throw lots of data onto the screen and be able to look at it and interact with it. The machines of 2004 that existed in businesses were not exactly ready for them, and technologies like OpenGL and DirectX weren’t yet a fit for us (though we would come back to these later). We spent a lot of time squeezing performance out of our MFC-based application to get a good balance between the quality and performance of our visualizations, letting people build visualizations with tens or hundreds of thousands of graphical marks. This included everything from trying to smartly pre-allocate the Windows resources we would use (because creating them on-demand was too slow), to creating “incremental” rendering systems that would both spread rendering of complex visualizations out in time, and render them in phases of increasing quality, so a quick version could be displayed to support high interactivity, and a high quality version delivered the beautiful output we desired.

Design as a first-class concern

From the early days, we knew we wanted anyone to be able to learn these tools and work effectively with their data. That meant that we couldn’t rely on people having specialized knowledge — couldn’t rely on them writing SQL, designing good visualizations or color mappings, understanding geographic mappings, etc. Instead, we had to build in that knowledge. But we also had to appeal to the experts — anyone who showed up with these skills had to be able to put them to use. That means a fine line between building a narrower, guided experience for anyone, and a more open experience for people with more skills — and doing it in such a way that both audiences feel like the product was built for them.

To achieve this, we focused on developing and applying a set of design principles that would guide us on creating amazing experiences around working with data, and we tested the design of new capabilities against these principles. These included incremental construction and immediate feedback, direct interaction, and having smart defaults. These led us to emphasize features that allowed someone to do something complex by building up simple, direct actions with good feedback at every step. This would let both experts and non-experts understand the impact of their actions. It allowed us to eschew the paired design/view approaches common in other applications of the time. We also had to ensure that “every intermediate state was meaningful when dragging around fields,” recalls Scott Sherman, another key engineer from those days, when thinking about interacting with a single-mode application.

Flow

We believed that people should get into a flow state when working with their data — that state that artists or musicians or great engineers describe when they are practicing their craft, when the tools that they use become extensions of themselves and essentially disappear. For a data worker, it would be that state of just being able to think about the data and the questions you want to answer or the goal you want to achieve. Flipping between design and view modes, common in the BI and reporting tools of the time, would directly interfere with achieving that. So, we strove to make everything in the application as live and direct as possible.

These principles also pushed us to uncover ways to fit more analytical capabilities into this flow state — we wanted these to be interactive and direct, rather than a matter of configuration. These included the automatic construction of visualizations from data (ShowMe, in 2005), the single-click coordination of multiple views (dashboards, in 2007), the automatic geocoding of place names for display on a map (in 2008) and many more over the years. These experiences would end up changing the users of our products from customers to fans.

And that brings us to now — nearly 18 years later. The original VizQL work is still the heart of our product, and the work we have done since then around building data platforms and design principles are with us every day as we continue to make great products to help people see and understand their data. We owe a great deal of thanks to founders Chris and Pat and their collaborator Diane for their groundbreaking work that turned into both an amazing set of products, and an amazing company.

Related Stories

Subscribe to our blog

Get the latest Tableau updates in your inbox.